The UK’s Online Safety Bill has now received Royal Assent. For those of you unfamiliar with the legislative process in the UK, this is when the King formally agrees to make the bill into an Act of Parliament (law).

The act aims to make the UK the safest place in the world to be online, addressing multiple online harms including fraud. This was very nearly not the case, with earlier versions of the bill failing to address the role played by social media and search engine platforms in the growth of online fraud.

Crucially, the act requires those platforms to prevent paid-for fraudulent ads from appearing on their services. The importance of this provision cannot be overstated: the recently released UK Finance half-year fraud report found that 77% of authorised push payment (APP) fraud started online. This supports data reported by banking giants such as Barclays, which shows that 75% of scams take place on social media, auction sites or dating apps.

Given that much of APP fraud appears to start online, this blog will focus on what is expected of the platforms and the consequences if they are found not to have met the requirements of the law. Before we move on to those expectations, it is also worth acknowledging that the online advertising market extends beyond social media and search platforms. The act is also intended to work alongside the Online Advertising Programme that will regulate the wider online advertising ecosystem.

What does the act specifically ask tech platforms to do?

The act requires them to operate their services using “proportionate systems and processes” to:

- Prevent (or minimise in the case of search engines) the chances of a consumer encountering a fraudulent ad,

- Minimise the time the ad is present, and

- Swiftly remove (take down) the ad once they are made aware of it.

The act sets a lower expectation for search engines, with the burden placed on them to minimise rather than prevent. This crucial difference recognises that search engines serve up a mix of paid and organic content, with the term “encountering” referring to a user clicking on a paid ad or search return.

We also need to examine why the term “proportionate” is used. Put simply, it enables the platform to consider the nature and severity of potential harm. It also recognises that the platform may lack control over the content that third-party intermediaries place on its service.

What does the act consider fraudulent?

An ad is considered fraudulent if it meets a few conditions:

- It is a paid-for advertisement,

- It amounts to an offence under the Financial Services and Markets Act 2000, the Fraud Act 2006, or the Financial Services Act 2012, and

- It isn’t regulated user-generated content (user-generated posts).
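The three conditions above all have to hold at once, which can be sketched as a simple check. This is an illustration of the logic only, not a legal test: the `Ad` class, its field names, and `is_fraudulent_ad` are hypothetical names invented for this example, and deciding whether an ad amounts to an offence is of course far harder than setting a flag.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    # Hypothetical flags, one per condition listed above.
    is_paid_for: bool
    amounts_to_listed_offence: bool
    is_regulated_user_generated_content: bool


def is_fraudulent_ad(ad: Ad) -> bool:
    """Return True only when all three conditions are met together."""
    return (
        ad.is_paid_for
        and ad.amounts_to_listed_offence
        and not ad.is_regulated_user_generated_content
    )


# A paid-for scam ad meets all three conditions.
paid_scam_ad = Ad(True, True, False)
# The same scam posted as an ordinary user post fails the first and third conditions.
user_post = Ad(False, True, True)

print(is_fraudulent_ad(paid_scam_ad))
print(is_fraudulent_ad(user_post))
```

The point of the sketch is that a scam posted as ordinary user content is not a "fraudulent ad" under this definition, even though it may still be illegal content.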

It is also worth noting that fraudulent content is considered “priority illegal content” alongside terrorist and child sexual exploitation and abuse content.

How will the act be implemented?

In simple terms, Ofcom envisions implementation comprising three phases:

- Phase One will focus on the causes and impacts of online harms, providing guidance on risk assessment and setting out what the platforms can do to reduce the risk of harm.

- Phase Two will focus specifically on child safety and the protection of girls and women.

- Phase Three will see platforms categorised using factors such as user numbers and functionality, with those deemed to fit the criteria required to provide greater transparency, more user empowerment, and measures to prevent fraudulent ads.

These three phases are expected to be complete by mid-2025.

What happens if companies do not comply?

The act has extraterritorial effect and encompasses platforms that have “links with the UK.” This means that any platform that has a significant number of users in the UK, seeks to target users in the UK, or can be accessed from the UK falls within the provisions of the act.

The UK communications regulator, Ofcom, has been afforded wide-ranging powers to ensure compliance. It can compel platforms to meet their obligations and impose fines of up to £18 million or 10% of the provider's worldwide annual revenue (whichever is higher).
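To make the “whichever is higher” rule concrete, here is a minimal sketch of the arithmetic. The function name `maximum_fine_gbp` and the revenue figures are made up for illustration, and the sketch assumes revenue is already expressed in whole pounds; how Ofcom actually measures revenue and sets a penalty is outside what this blog covers.

```python
FIXED_CAP_GBP = 18_000_000  # the £18 million figure quoted above


def maximum_fine_gbp(worldwide_annual_revenue_gbp: int) -> int:
    """Upper limit of the fine: the higher of £18m or 10% of revenue."""
    ten_percent_of_revenue = worldwide_annual_revenue_gbp // 10
    return max(FIXED_CAP_GBP, ten_percent_of_revenue)


# Hypothetical smaller platform: 10% of £50m is £5m, so the £18m figure applies.
print(maximum_fine_gbp(50_000_000))     # 18000000
# Hypothetical large platform: 10% of £1bn is £100m, which exceeds £18m.
print(maximum_fine_gbp(1_000_000_000))  # 100000000
```

On these numbers, the 10% figure becomes the relevant limit once worldwide annual revenue passes £180 million, which is why the largest platforms face a far greater exposure than the headline £18 million suggests.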

The senior managers of regulated platforms will also be liable to criminal prosecution for failures to comply with Ofcom’s investigation and enforcement powers.

Parallel Worlds of Financial and Tech Companies

If you are familiar with the laws that set out to prevent economic crime, you might well recognise the enforcement approach. Tristan Harris from the Center for Humane Technology often speaks about the Attention Economy, reflecting his view that the platforms are set up to “maximize engagement.”

When writing this blog, it occurred to me that engagement and money are not dissimilar, as both need to be moved or obtained and are of equal use to criminals. In the parallel worlds of financial services and tech, companies on both sides face compliance challenges. They now need to demonstrate that they know who their customers are, and why and how those customers are using their services. When suspected abuse is detected, they need robust measures in place to enhance due diligence or potentially exclude the customers involved.

Let's take the analogy one step further with a quick example. Money laundering is the act of concealing or disguising the origins of illegally obtained proceeds so that they appear to have originated from legitimate sources. The challenge faced by tech platforms is not dissimilar, with the criminal users of their services also seeking to conceal or disguise their true intentions.

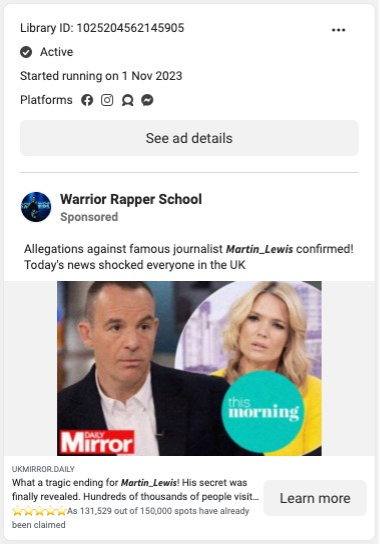

Readers in the UK will be familiar with the journalist Martin Lewis, who has advocated for change in the financial services and tech industries. In the example below, Lewis is the subject of an ad which is designed to create engagement. The ad appears to belong to the “Warrior Rapper School”, which claims to be the profile of a Peruvian rapper. Let’s put it down to account takeover (a problem for banks and tech alike).

As with money laundering, the scammer seeks to “disguise” their intentions, with the ad pointing to a .uk domain which, depending on where you’re located, either displays an innocuous webstore or redirects you to a fake news article. An example is shown below.

I’ve decided to call this process engagement laundering: the act of disguising malicious content behind legitimate sources and platforms to drive clicks and responses. The similarities to money laundering bring me to ask an open question, “What can tech companies learn from banks?”

In my opinion, tech companies can learn a lot from their peers in financial services. By working together, there is significant scope for them to accelerate the fight against scams. This need not involve the exchange of personal data, as many anticipate, but should start with the sharing of strategies to more holistically tackle the Fraud and Anti-Money Laundering (FRAML) risks faced by both industries.