OpenAI recently published its latest in a series of reports on disrupting malicious uses of AI (we covered the previous one in this space back in March). The June edition once again demonstrates how bad actors continue to leverage this new technology to advance their scamming capabilities, improving both the efficiency and apparent authenticity of their outreach.

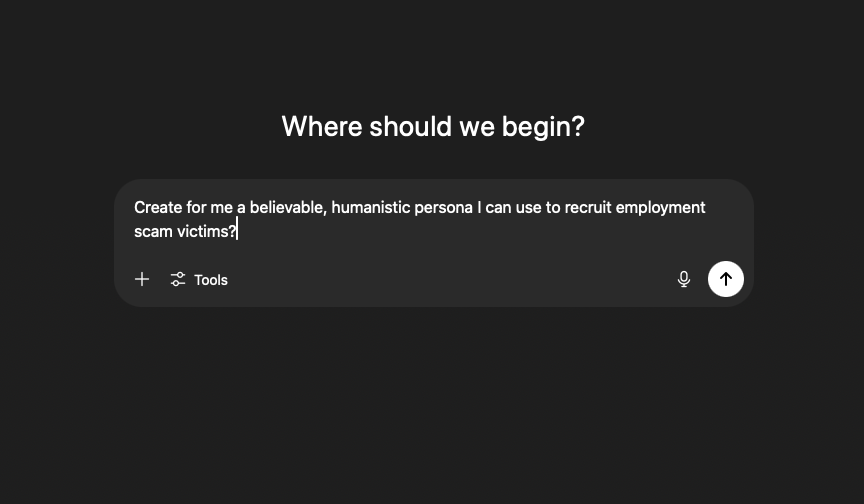

Our last blog on this subject touched upon the criminal use of chatbots to recruit potential employment scam victims. OpenAI now reports bad actors have taken this a step further, using its technology to create entirely new personas, fleshing out believable backstories for their bogus hiring managers.

“We determined that these threat actors attempted to use our models for a deceptive operation. Specifically, they used detailed prompts, instructions, and automation loops to generate tailored, credible résumés at scale.

“They also used our models to generate content that resembled job postings aimed at recruiting contractors in different parts of the world.”

AI tools can even automate endpoint configurations, so the accounts receiving the proceeds of these employment scams appear domestic and legitimate, evading security controls.

Defense today, finance tomorrow

Of course, the main event we’re all bracing for is the complete automation of these attacks. OpenAI’s June report highlights the use of its tools for intelligence gathering by actors linked to competing nation-states. While, for now, this activity may remain squarely focused on the defense industry, it’s only a matter of time before it reaches the financial sector.

This time, the threat actor was using OpenAI’s models to target technical elements, with the aim of bypassing security controls such as multi-factor authentication and capturing authentication tokens. The tactics range from identifying gaps in the control environment to modifying both the software and the configurations (like firewalls) used to defend the application. They include password brute-forcing, port scanning, penetration testing, and ultimately gathering knowledge about the communications infrastructure upon which our defense operations rely.

“Asking for details on government identity verification and telecom infrastructure analysis … Using LLMs to analyze and summarize vulnerability reports and generate exploit payload ideas … Generating code obfuscation and anti-reverse engineering techniques for malware development …”

We forecast this in our white paper some moons ago. Seeing it realized against the defense industry should send a shiver up your spine. It’s only a matter of time before these approaches are used against our financial services industry, and that’s likely going to happen sooner rather than later.

Task scams upgraded

Lastly, and more squarely in the scams space, OpenAI reports bad actors using its tools to advertise and localize task scams (where gig workers are offered short-term paid work). Scammers send these high-paying job offers to their potential victims via SMS. Inevitably, the hook is high pay for little work. Once they have a fish on the line, the scammer eases their mark into an investment opportunity. As we’ve noted in recent blogs, investment scammers often send their targets small token payments to generate confidence and enthusiasm in the relationship before harvesting the victim’s account.

“Off-platform reports and conversations in which ‘employees’ demanded refunds indicate that at least some individuals paid these alleged employers. We also observed genuine users defending the companies on social media, suggesting a degree of real-world engagement.”

Confidence is the new vulnerability

The relentless march of automated AI continues, and so too does the abuse of these first, pioneering GenAI tools. Consumer diligence will likely catch the vast majority of AI-powered scam attempts, but even if only a small, single-digit percentage of these attacks succeed, that’s likely enough of an economic incentive for the bad actors behind them to continue employing, refining, and sharing their scam tactics.

As predicted, scams, social engineering, and malware will always be attractive use cases for this kind of technology, and the same capabilities that power legitimate activity can be turned toward nefarious outcomes. While we again salute OpenAI’s internal diligence in defense of this pioneering technology and the altruistic services it will create, as well as the transparency it is providing the industry, these are still early days for artificial intelligence co-pilots and creators. Already, the transformative effects are clear. It’s going to be harder for the average human to detect the machine behind the cursor, especially when it has malicious intent or understands how to manipulate us by exploiting our psychological impulses. Ultimately, we can be confident that we should be less confident in what we see, assume, and know about the counterparty on the other end of the IP address.

Stay tuned.