Most products and technologies are developed for the purpose of making our lives easier. But in some cases, those same products and technologies can also be leveraged for malicious purposes. Such is the case with bots.

Automated scripts have many legitimate uses that help accelerate processes and drive efficiency. Financial activity that is meant to be carried out by humans, however, should not involve automated scripts. Even so, criminals continually use automated bots to carry out account takeover attacks such as credential stuffing and brute force attacks. More recently, BioCatch has witnessed an unfolding phenomenon of various types of bots being used to commit account application fraud.

How are bots used for account takeover attacks?

With the proliferation of data breaches and phishing attacks, criminals have access to billions of records containing personally identifiable information, including usernames and passwords. With so many credentials to abuse, and knowing that most consumers reuse the same password across several accounts, criminals have turned to tools such as STORM, SilverBullet, and SNIPR to validate stolen credentials at scale, in minutes, across multiple websites. When a match is confirmed, the criminal can use the verified credentials to log in and take over the account. These attacks often appear legitimate to Web Application Firewalls (WAFs) and can evade basic security measures.

Why is bot detection so challenging?

Bot detection is challenging for several reasons. Traditional controls look at the velocity of IP use and analyze bot signatures to block bad bots, and many security solutions, such as WAFs, rely exclusively on IP traffic analysis to distinguish bots from humans. However, bots can rotate through millions of clean, residential IPs. Often, each IP will send no more than one or two requests before the bot switches to another IP, so no single address ever looks busy enough to block.
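To make the IP rotation problem concrete, here is a minimal sketch of a per-IP velocity check. The function name `flag_ips_by_velocity`, the 60-second window, and the limit of five requests are all assumptions chosen for illustration, and the addresses come from documentation-only ranges. It is not how any particular WAF works, only an example of the general idea.

```python
from collections import defaultdict

def flag_ips_by_velocity(requests, window_seconds=60, max_requests=5):
    """Return the set of IPs that exceed max_requests within a sliding window.

    requests: iterable of (timestamp_seconds, ip) tuples.
    The window and limit are illustrative assumptions, not recommended values.
    """
    by_ip = defaultdict(list)
    for ts, ip in requests:
        by_ip[ip].append(ts)

    flagged = set()
    for ip, stamps in by_ip.items():
        stamps.sort()
        start = 0
        for end in range(len(stamps)):
            # Advance the window start until the span fits in window_seconds.
            while stamps[end] - stamps[start] > window_seconds:
                start += 1
            if end - start + 1 > max_requests:
                flagged.add(ip)
                break
    return flagged

# A single-IP bot: 100 login attempts in 10 seconds from one address.
single_ip = [(i * 0.1, "203.0.113.7") for i in range(100)]

# A rotating bot: the same 100 attempts, but only two requests per IP.
rotating = [(i * 0.1, f"198.51.100.{i // 2}") for i in range(100)]

print(flag_ips_by_velocity(single_ip))  # {'203.0.113.7'}
print(flag_ips_by_velocity(rotating))   # set()
```

Both lists describe the same volume of attempts, but the rotating bot never exceeds the per-IP limit, so a control that only counts requests per address sees nothing unusual.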

Other solutions look at the timing of a session or activity to identify suspicious behaviors. However, bot developers have introduced delays in form fill completion in an attempt to mimic human behavior. Because bots impersonate genuine users, blocking on the basis of suspicious traffic alone carries a high probability of blocking good customers while bots pass through.

Does CAPTCHA provide protection against bots?

The industry introduced the CAPTCHA control in the early 2000s as a way to prevent automated attacks. In terms of user experience, consumers have found it frustrating and intrusive, and many website owners are hesitant to use it because it can lead to website abandonment and lower customer conversions. But is it effective? Although it has evolved over time, there is still a continuous arms race between bot developers and CAPTCHA developers. Some CAPTCHAs can be easily solved by more advanced bots, and human CAPTCHA farms support bot activity when the bots cannot solve the puzzles themselves.

What are some ways to effectively detect bot activity?

As with all types of fraud MOs, we highly recommend a layered approach. Here are some bot activity identifiers to consider:

- Spikes in traffic from unknown locations. For example, if your bank doesn’t operate in a certain country or state, but you suddenly receive many requests from unknown regions, there’s a good chance it’s a bot attack. Requests coming from regions that don’t make sense for your business are often bot requests.

- Abnormal session durations. Bots can complete their task in milliseconds, whereas humans tend to stay for at least a few seconds on a field, but don’t often stay on one page for more than a few minutes. Understanding the common human times and detecting anomalies from a timing perspective is a measure that is commonly used.

To understand the effectiveness of this countermeasure, let’s classify types of bots:

| Bot Style | Description |
| --- | --- |
| Traditional Bot | A script is run through the whole application, with a very fast time to submission and extremely fast key entry recorded on every PI element. The same device IDs and IPs are generally used one after the other. |
| Periodic Bot | Very consistent keystroke entry on every PI field, to make it appear that a human is applying for the account. No rapid-fire data entry. |
| Hybrid Bot | A mixture of robot and human interaction. |
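The two identifiers above can be sketched as simple checks. This is only an illustration: the function names, the one-second minimum per field, and the five-minute maximum per page are assumptions standing in for "a few seconds" and "a few minutes", and the sample timings are made up to resemble the Traditional and Periodic styles in the table.

```python
def out_of_footprint_share(request_regions, served_regions):
    """Fraction of requests that come from regions the business does not serve."""
    if not request_regions:
        return 0.0
    outside = sum(1 for region in request_regions if region not in served_regions)
    return outside / len(request_regions)

def timing_flags(field_seconds, min_field_seconds=1.0, max_page_seconds=300.0):
    """Flag a form fill whose timing falls outside assumed human bounds.

    field_seconds: mapping of field name -> seconds spent on that field.
    Both thresholds are hypothetical and would need tuning on real traffic.
    """
    flags = []
    if any(t < min_field_seconds for t in field_seconds.values()):
        flags.append("field_too_fast")
    if sum(field_seconds.values()) > max_page_seconds:
        flags.append("page_too_slow")
    return flags

# Half of these requests come from regions outside the served footprint.
print(out_of_footprint_share(["FL", "FL", "XX", "YY"], {"FL", "NY"}))  # 0.5

# Traditional Bot: every field completed in milliseconds.
traditional = {"name": 0.02, "ssn": 0.01, "phone": 0.01}
# Periodic Bot: deliberate, evenly spaced delays on every field.
periodic = {"name": 4.0, "ssn": 4.0, "phone": 4.0}

print(timing_flags(traditional))  # ['field_too_fast']
print(timing_flags(periodic))     # []
```

The Traditional Bot is caught by the per-field minimum, while the Periodic Bot passes both timing checks, which is why coarse timing thresholds are only one layer of the approach.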

What role does behavioral biometrics play in detecting bot activity?

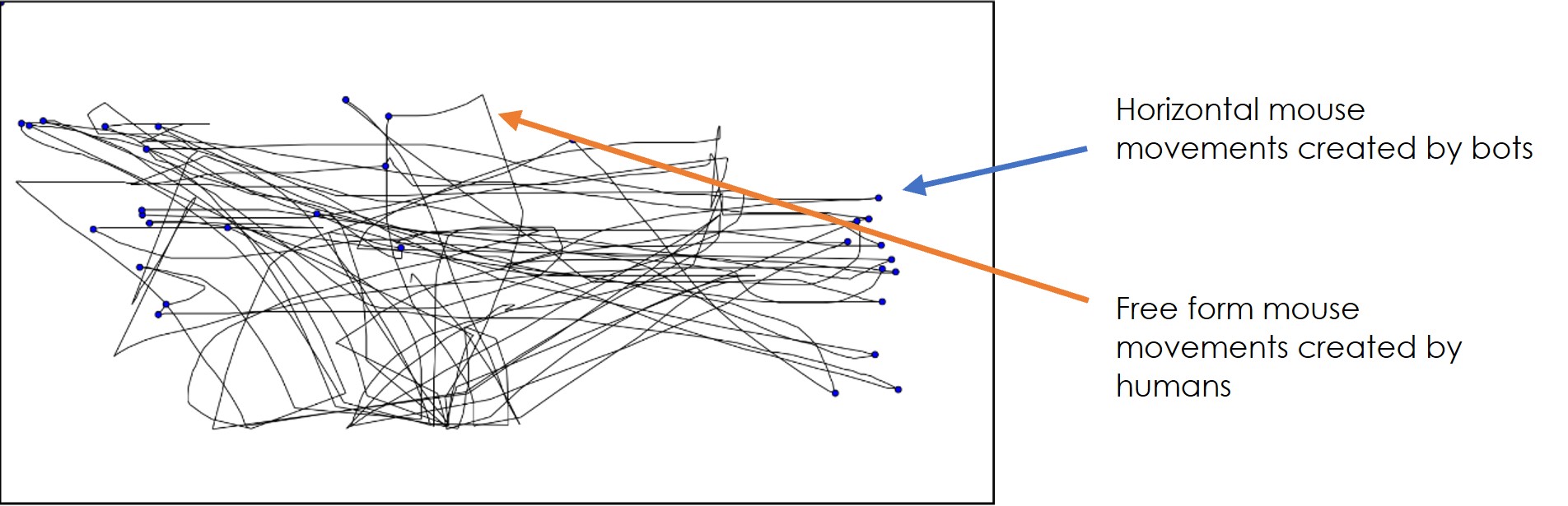

If we look only for session time anomalies, or even field completion time anomalies, we might miss the Periodic Bots, which introduce time delays on purpose with the intention of mimicking a human. The only way to detect these bots is to continually monitor the user’s behavior on the page, including keystrokes, clicks and mouse movements, and detect the subtle abnormal timing activity. This provides the nuanced level of analysis required to identify those millisecond anomalies. Monitoring session duration or field level completion just won’t cut it. Continuous monitoring with behavioral biometrics is needed to identify every automated activity within an online session.
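One way to picture the "very consistent keystroke entry" of a Periodic Bot is to measure how much the gaps between keystrokes vary. The sketch below is a deliberately simple stand-in, not BioCatch's method: the function names and the 0.1 variation threshold are assumptions, the timestamps are invented, and a bot that adds random jitter would need richer signals than this.

```python
from statistics import mean, pstdev

def inter_key_intervals(key_times_ms):
    """Gaps in milliseconds between consecutive keystroke timestamps."""
    return [b - a for a, b in zip(key_times_ms, key_times_ms[1:])]

def interval_variation(key_times_ms):
    """Coefficient of variation (stdev / mean) of the inter-key gaps.

    Returns None when there are too few keystrokes to measure.
    """
    intervals = inter_key_intervals(key_times_ms)
    if len(intervals) < 2:
        return None
    avg = mean(intervals)
    if avg <= 0:
        return None
    return pstdev(intervals) / avg

def looks_scripted(key_times_ms, min_variation=0.1):
    """True when typing rhythm is more regular than the assumed human minimum."""
    cv = interval_variation(key_times_ms)
    return cv is not None and cv < min_variation

# Periodic Bot: one key every 200 ms, slow enough to pass a speed check.
periodic_bot = [0, 200, 400, 600, 800, 1000]
# Human: similar average pace, but uneven gaps between keys.
human = [0, 140, 390, 470, 820, 1010]

print(looks_scripted(periodic_bot))  # True
print(looks_scripted(human))         # False
```

Both sequences take about one second in total, so a field completion time check treats them the same. Only the keystroke-level view separates them.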

What are the emerging use cases for the use of bots to commit financial fraud?

One of the fastest emerging trends BioCatch is seeing is the use of bots in the account opening process. With ample funds being available from government stimulus packages and unemployment benefits, criminals claimed these benefits and received their ill-gotten gains in fraudulently opened accounts. To do so, they had to open accounts on behalf of legitimate applicants and funnel the money to these accounts. It was a race against the legitimate beneficiaries and criminals had to hurry.

To aid in the opening of thousands of new accounts, criminals developed Hybrid Bots, which allow some parts of the application to be filled in manually while other parts are completed in an automated fashion. For example, a script will generally be used on either the SSN or the phone number, while we may see pasting in some other PI fields, such as address, and keystroke entry in others.

The image below shows mouse movements and mouse clicks (blue dots) seen on a Hybrid Bot application from Florida, where many of our U.S. customers saw a spike in new account applications during the pandemic. In the image, there is a mix of free-flowing movements and clicks, as well as very straight horizontal lines across the screen that represent the activity of automated bots.

One reason for the focus on Florida was that the state had increased their unemployment benefits. Since then, we have seen similar patterns in states that had more compelling unemployment benefits than others.
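The very straight horizontal lines described for the Hybrid Bot application can be expressed as a simple geometric test on a mouse path. This is a minimal sketch under stated assumptions: the function names, the 0.99 straightness threshold, and the one-pixel vertical tolerance are hypothetical, and the coordinates are invented rather than taken from the image.

```python
from math import hypot

def straightness(points):
    """Ratio of start-to-end distance to total path length, between 0 and 1.

    points: list of (x, y) screen coordinates in the order they were recorded.
    Returns None when the path is too short or has zero length.
    """
    if len(points) < 2:
        return None
    path = sum(
        hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(points, points[1:])
    )
    if path == 0:
        return None
    (x0, y0), (xn, yn) = points[0], points[-1]
    return hypot(xn - x0, yn - y0) / path

def is_flat_horizontal(points, min_straightness=0.99, max_y_spread=1):
    """True for a near-perfectly straight path with almost no vertical drift."""
    s = straightness(points)
    if s is None:
        return False
    ys = [y for _, y in points]
    return s >= min_straightness and max(ys) - min(ys) <= max_y_spread

# Scripted movement: a perfectly level sweep across the screen.
scripted = [(x, 300) for x in range(100, 900, 50)]
# Hand movement: the pointer drifts vertically as it travels.
human = [(100, 300), (180, 322), (260, 310), (330, 351), (420, 340), (500, 372)]

print(is_flat_horizontal(scripted))  # True
print(is_flat_horizontal(human))     # False
```

In a Hybrid Bot session, some segments of the path would pass this test and others would not, which matches the mix of free-flowing and perfectly straight movement described above.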

The ability to continually evolve and adjust to stay ahead of fraud MOs is a critical capability for any fraud control. The reality is, if you want to be able to truly distinguish between a human and a bot and decipher legitimate activity vs fraudulent activity, a deep understanding of human behavior patterns is required.

Learn more about how criminals are using bots and other tactics to commit fraud in the account opening process in the new white paper, Account Opening Fraud in the Time of Digital Banking.